November 04, 2025

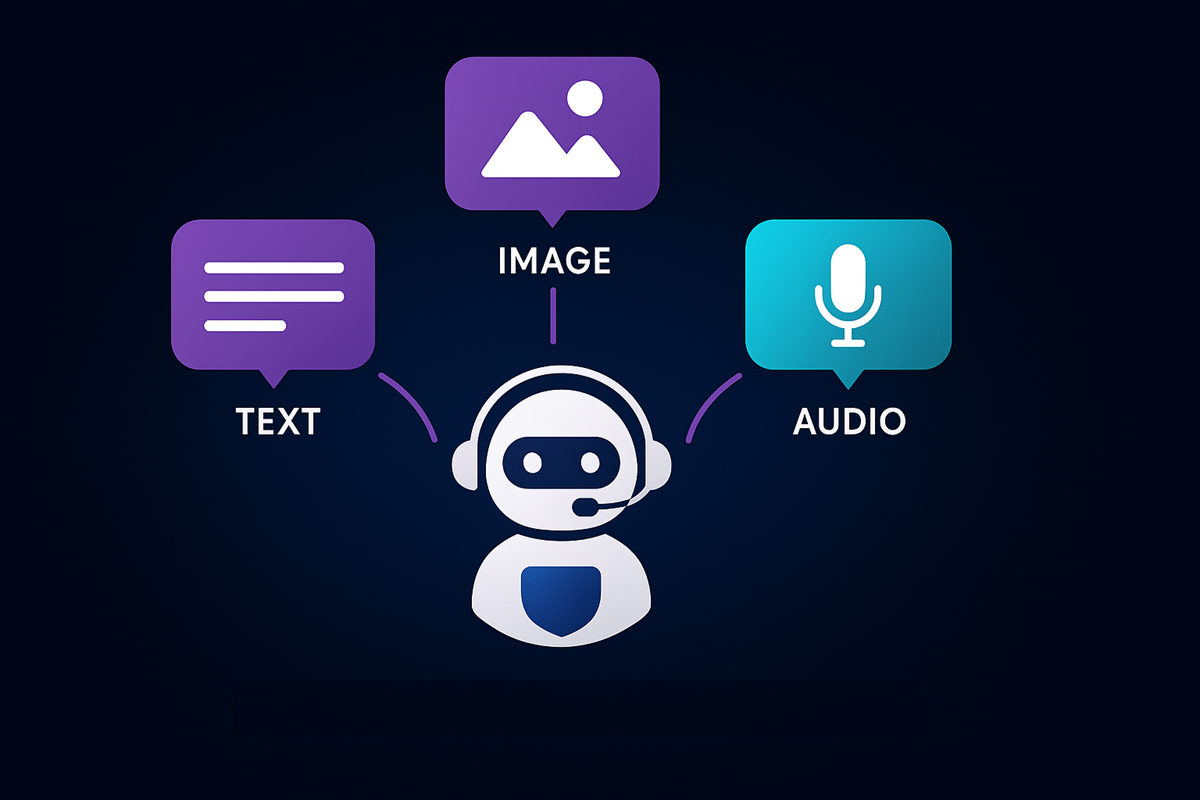

Crescendo Offers A CX First: Multimodal AI With Simultaneous Voice, Text and Visual Interactions

As customer experiences become less about the written and typed word, consumers will expect to communicate with brands in ways that suit them. Enter Crescendo, with an AI that is happy to communicate through text, voice or images.

Crescendo’s contact centre offering, which added support for Amazon’s Nova Sonic voice model earlier in the year, now goes fully multimodal, letting consumers engage with brands in whichever way is most practical or beneficial for them.

For example, a customer can start a chat on the keyboard, switch to sharing an image (say, of a faulty product or one that’s the wrong colour), and then receive spoken instructions on how to solve the problem or what steps to take next while keeping their attention on a support page.

In broader use, online shoppers can upload a selfie to get an instant, AI-powered skin-tone match, or a furniture photo to find the right shade of paint. Drivers can describe a dashboard warning via chat and have the AI connect to the vehicle’s systems, talk them through a fix or provide directions to the nearest garage.

AI is Happy to Talk

Crescendo’s AI-native contact centre, which the company bills as the first of its kind, aims to deliver a new standard of customer experience by tying success to real outcomes. According to Crescendo, that foundation has now evolved into a breakthrough that marks the arrival of the next generation of AI for CX: the transition from rigid, scripted systems to fluid, multimodal intelligence.

“We see huge potential in Crescendo’s Multimodal AI to change how our customers interact with Veer,” said Nick McKay, CEO of Veer, designers of premium all-terrain gear. “If something happens on the trail, parents can instantly chat, talk, or share a photo of their Cruiser so we can help with no restarts. It’s the next step in making every experience feel as effortless as our products — and a glimpse of how shopping and support are evolving together.”

The Crescendo Secret Sauce: No Workflows

Most AI systems in customer service today rely on workflows: rigid, pre-programmed scripts that dictate how conversations unfold. These workflow dependencies make such systems difficult to scale or adapt, forcing organizations to manually define logic for every possible scenario.

Crescendo takes a fundamentally different approach. Its AI Assistants work without the need for workflows, learning directly from the same operational and policy content used by human associates.

Instead of being programmed to follow steps, the AI learns company policies, product data and procedures directly from existing knowledge content. Crescendo says that by grounding every response in verified knowledge, its AI prevents hallucinations and achieves 99.8% accuracy.

This workflow-free approach allows Crescendo to deploy sophisticated AI Assistants faster, maintain them with less manual effort, and ensure that both AI and human agents operate from the same, accurate information.

For customers already using the Crescendo AI Suite, adding Multimodal AI to their existing solutions can take as little as two weeks, because it reuses the same knowledge base and backend integrations.

As for the claim of a first triple-threat chatbot, research shows that ElevenLabs handles text and speech, while other vendors offer the various components needed to build such a stack. But this does look like the first commercial offering to combine all three.